- Golden Globe Awards

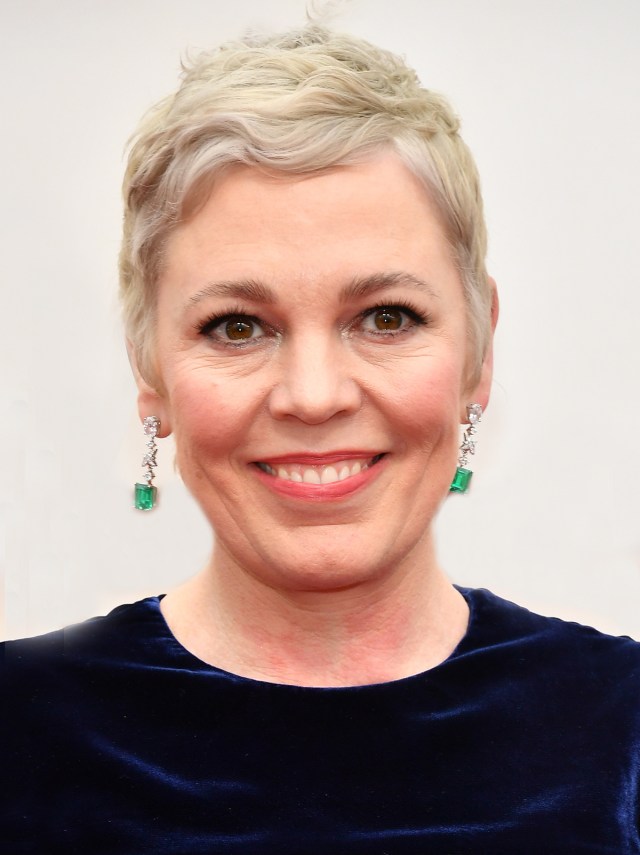

Nominee Profile 2021: James Corden, “The Prom”

The multi-talented James Corden, an actor, comedian, singer, writer, producer and television host, has always credited his upbringing by his social worker mother and musician father as the secret to his success. Growing up in Hazlemere, Buckinghamshire, with two sisters in what he described as a “glorious family environment,” Corden admitted that he was the quiet one of the three.